A Prototype of Repositioning Using Aruco Tags

Every year, our team use an odometry-based positioning system to determine the robot’s position. This system, although it is relatively precise, has at least two drawbacks that are well known by participants of the France robotics cup:

- Positioning error accumulates with time.

- If the robot slides, wheels’ coders measure a movement that does not correspond to reality.

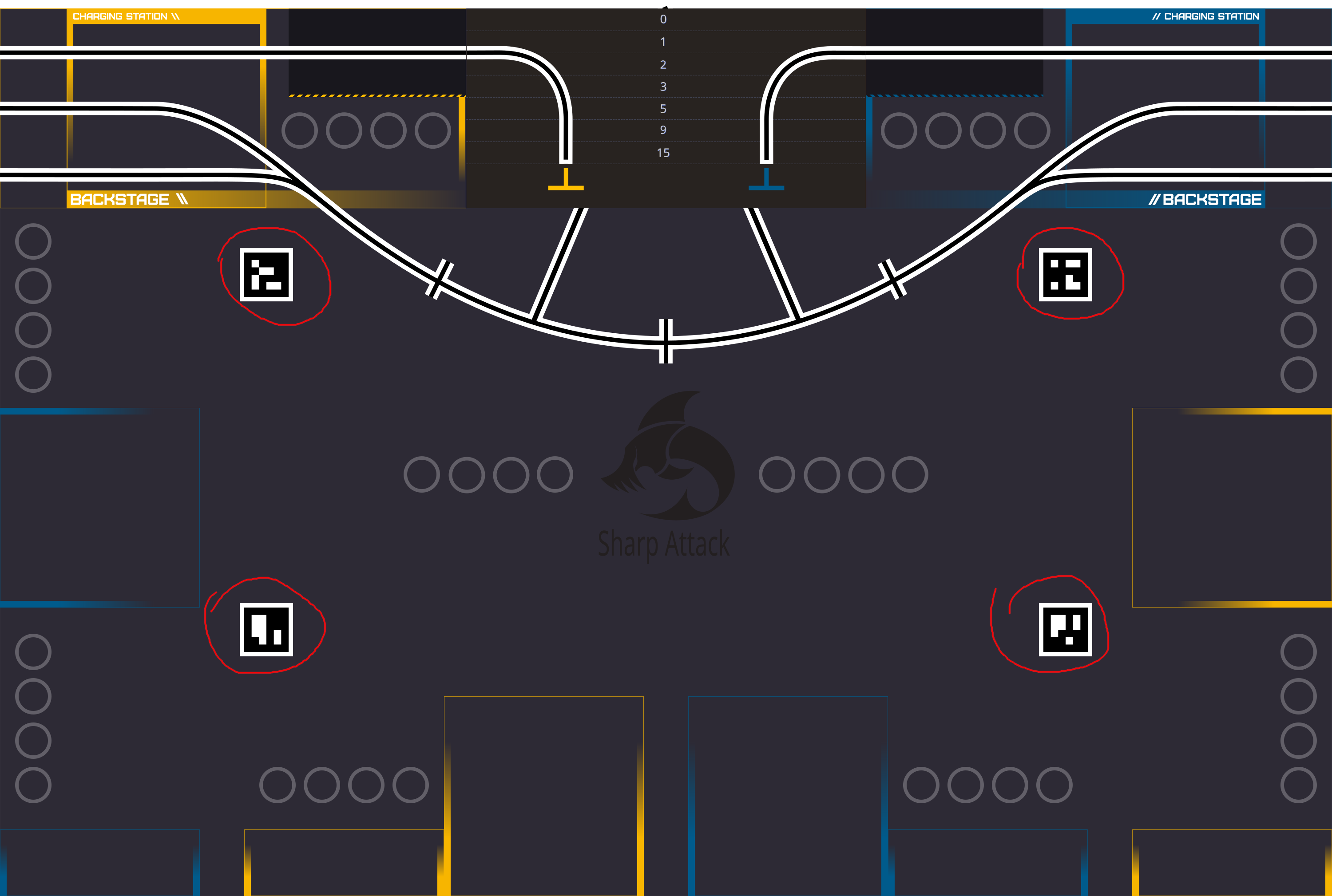

We already discussed a lidar-based positioning system before, but the difficulty to track beacons led to us exploring new options. During the inter-UT week, we decided to experiment a solution allowing repositioning using static arucos on the terrain. There are 4 arucos on the terrain as shown in red below:

Teams can also add aruco tags on their beacons / remote computing device (as long as these tags are in the authorized ranges stated by the rules).

This short article does not aim to present a complete solution, but rather discusses our experience during the creation of a first prototype.

Proposed solution

Our first idea was to achieve repositioning using the known absolute position of aruco tags on the terrain. Seeing an aruco, the robot can use its position and orientation to position itself.

While this solution seems interesting, it has two drawbacks:

- We need to know the position of arucos in the global frame of the terrain, which forces us to maintain a configuration with these positions. Furthermore, we have to work in the global terrain frame, but our robot only has knowledge of its own frame (the origin of this frame being its starting point on the terrain).

- Manufacturing tolerances of the table are added to measurement uncertainties.

We thus opted for a different tactic: When the robot observes a static aruco tag for the first time, it saves its position at that time using odometry. We suppose that odometry did not drift too much yet, and we take its pose estimation as ground truth. When observing the same aruco tag at a next point in time, the robot will compute its position again. The difference between this new position and the old one will let us appreciate the odometry drift and infer the real position of the robot. This solution bypasses the previously mentioned drawbacks, but suppose that the position estimated by odometry is perfect when observing an aruco tag for the first time, which is if course an approximation.

Detecting and Positioning Arucos in Space

To confer vision to our robot, we use an OAK-D Lite stereo camera, that allows us to retrieve depth information in addition to a 2D image.

Our sharpsight software is tasked with publishing on the network the 3D positions of detected aruco tags, so that the card controlling the robot has access to them. We detect the aruco tags on the camera images using the Python bindings of the famous OpenCV library. The depth information of the camera allows us to determine the 3D position of the arucos in the camera frame. At this point, we filter anomalous observations (for example, the camera sometimes report aruco tags with a position of (0,0,0) by mistake).

When the aruco tag is positionned in the camera frame \(C\), we apply a change of basis to transform its coordinates to the local frame \(L\) of the robot, of which the origin correspond to the odometric center of the robot:

\[x_{L} = Rx_{C} + t,\]where \(R\) represents a matrix where columns are the coordinate of the basis vector of the camera frame in the local robot frame, and \(t\) the position of the camera in the local frame of the robot. Finally, we publish this information on the network.

Repositioning

The robot control card periodically receive aruco positions in the local robot frame. Each time the control task is called (at a frequency of 200Hz), we apply the following (purposely naive and simplified) algorithm:

def get_pose_from_arucos(aruco_db, odometry):

# get the observed aruco tags from the network

arucos = retrieve_arucos()

# update the database of seen aruco tags (aruco_db) if necessary,

# by saving the position of arucos seen for the first time

for aruco in arucos:

if not aruco in aruco_db:

aruco_db = save_aruco(aruco_db, aruco)

# retrieve the aruco tag that was saved first (its position should

# be more accurate)

aruco = min(arucos, key=aruco_db.get_save_time)

# we deduce our orientation from the aruco orientation

saved_pose = aruco_db.saved_pose(aruco)

Δθ = aruco.θ - saved_pose.θ

robot_θ = saved_pose.odometry.θ - Δθ

# transform the position of the aruco in the global frame of the

# robot using change of basis

R = [[cos(robot_θ), -sin(robot_θ)], [sin(robot_θ, cos(robot_θ))]]

aruco_pose = R @ aruco.pose + odometry.xy

# update the robot's pose with the difference between the current

# and first observation of the aruco tag position

robot_x = odometry_x - (aruco_pose.x - saved_pose.x)

robot_y = odometry_y - (aruco_pose.y - saved_pose.y)

return robot_x, robot_y, robot_θ

Experimental Measurements and Conclusion

We observe above, on a test with a short movement, the pose estimation difference between odometry and our aruco-based method1. We can suppose that odometry does not drift on such a short movement. When the robot is stopped, measurements looks similar. The aruco-based estimation varies even when the robot is static, but is still relatively stable: we observe a range of 4mm when measuring positions. When moving, our technique does not estimate position correctly, and we also observe latency. This latency is at least partially due to the lower sampling frequency of the camera compared to odometry, while the pose estimation issue might come from the quality of captured images.

The pose estimation problem is confirmed when observing the 2D trajectories of the robot predicted by odometry and by our aruco-based technique: the estimation seems correct when the robot is stopped, but completely unusable when moving. To conclude, we will have to either fix this issue or only use our aruco-based technique when the robot is static.

-

The temporal axis is not completely faithful to reality, as each measurement correspond to the robot observing an aruco tag, but it did not always observe an aruco during the test. ↩